Notes from "Fundamentals of Data Engineering": Data Engineering Described

Data Engineering Described

What is Data Engineering?

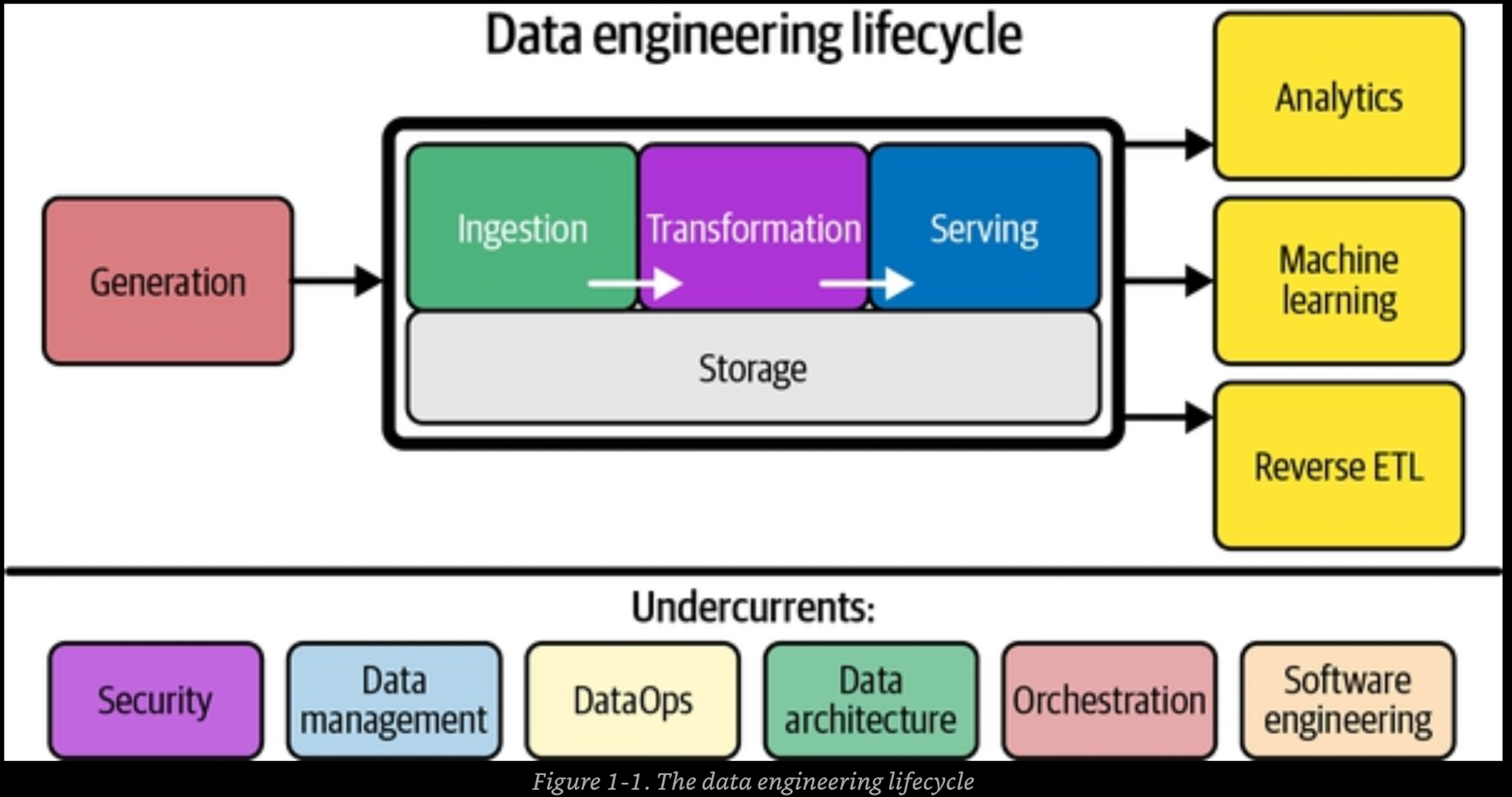

Data engineering is a set of operations aimed at creating interfaces and mechanisms for the flow and access of information. It is all about the movement, manipulation, and management of data. More precisely, data engineering is the development, implementation, and maintenance of systems and processes that take in raw data and produce high-quality, consistent information that supports downstream use cases, such as analysis and machine learning. Data engineering sits at the intersection of security, data management, DataOps, data architecture, orchestration, and software engineering. Data engineers manage this entire lifecycle, from raw data to downstream use cases.

The Data Engineering Lifecycle

The stages of the data engineering lifecycle are as follows:

Generation

Storage

Ingestion

Transformation

Serving
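To make the five stages concrete, here is a minimal Python sketch that pushes a few records through one function per stage. The function names, the sample records, and the plain dictionary standing in for a storage system are all illustrative assumptions, not a prescribed design. In this sketch, storage is shared by ingestion, transformation, and serving rather than being a single step, since every later stage reads from or writes to it.

```python
from collections import defaultdict

def generate():
    # Generation: a hypothetical source system emits raw events.
    return [
        {"user": "a", "amount": "10.50"},
        {"user": "b", "amount": "3.25"},
        {"user": "a", "amount": "n/a"},  # malformed record
    ]

def ingest(records, storage):
    # Ingestion: land the raw records unchanged so they can be reprocessed later.
    storage["raw_events"] = list(records)

def transform(storage):
    # Transformation: drop records whose amount is not numeric,
    # then total the spend per user.
    totals = defaultdict(float)
    for rec in storage["raw_events"]:
        try:
            amount = float(rec["amount"])
        except ValueError:
            continue
        totals[rec["user"]] += amount
    storage["spend_by_user"] = dict(totals)

def serve(storage, user):
    # Serving: answer a downstream query, e.g. from a dashboard or an ML model.
    return storage["spend_by_user"].get(user, 0.0)

# Storage: a plain dict standing in for object storage or a warehouse.
storage = {}
ingest(generate(), storage)
transform(storage)
print(serve(storage, "a"))  # 10.5
print(serve(storage, "b"))  # 3.25
```

Keeping the raw records separate from the cleaned aggregate means the transformation can be rerun if its rules change, without going back to the source system.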

The data engineering lifecycle also has a notion of undercurrents—critical ideas across the entire lifecycle. These include:

Security

Data Management

DataOps

Data Architecture

Orchestration

Software Engineering
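Orchestration, in its simplest form, means running jobs in an order that respects their dependencies. The sketch below assumes a hypothetical pipeline whose tasks form a directed acyclic graph (DAG) and uses the Python standard library's `graphlib.TopologicalSorter` (Python 3.9+) as a stand-in for a real orchestrator such as Apache Airflow, which additionally handles scheduling, retries, and monitoring. All task names are illustrative.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
dependencies = {
    "ingest_orders": set(),
    "ingest_customers": set(),
    "clean_orders": {"ingest_orders"},
    "join_orders_customers": {"clean_orders", "ingest_customers"},
    "refresh_dashboard": {"join_orders_customers"},
}

def run_task(name, log):
    # Stand-in for real work (a SQL query, a Spark job, an API call, ...).
    log.append(name)

def run_pipeline(graph):
    log = []
    # static_order() yields each task only after all of its dependencies,
    # and raises graphlib.CycleError if the graph contains a cycle.
    for task in TopologicalSorter(graph).static_order():
        run_task(task, log)
    return log

order = run_pipeline(dependencies)
print(order)
```

Independent tasks (here, the two ingestion jobs) may appear in either order; a real orchestrator would typically run them in parallel.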

The Early Days: 1980-2000

Data warehousing ushered in the first age of scalable analytics. New massively parallel processing (MPP) databases, which use multiple processors to crunch large amounts of data, came on the market and supported unprecedented volumes of data.

The Early 2000s: The Birth of Contemporary Data Engineering

Coinciding with the explosion of data, commodity hardware (such as servers, RAM, disks, and flash drives) also became cheap and ubiquitous. Several innovations, notably Google's papers on the Google File System (2003) and MapReduce (2004) and, later, the open source Apache Hadoop project, allowed distributed computation and storage on massive computing clusters at a vast scale. These innovations started decentralizing and breaking apart traditionally monolithic services. A famous and succinct description of big data is the three Vs of data: velocity, variety, and volume.

The 2000s and 2010s: Big Data Engineering

Traditional enterprise-oriented and GUI-based data tools suddenly felt outmoded, and code-first engineering came into vogue with the ascendance of MapReduce. Yet despite the term’s popularity, “big data” has since lost steam. What happened? One word: simplification. Despite the power and sophistication of open source big data tools, managing them was a lot of work and required constant attention. Companies often employed entire teams of big data engineers, costing millions of dollars a year, to babysit these platforms. Those engineers often spent excessive time maintaining complicated tooling and arguably too little time delivering insights and value to the business.
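MapReduce structures a computation as a map phase that emits key-value pairs, a shuffle that groups those pairs by key, and a reduce phase that combines each group. The single-process Python sketch below imitates that flow with the classic word-count example; the function names are hypothetical, and a real MapReduce or Hadoop job would distribute each phase across many machines rather than run it in one loop.

```python
from collections import defaultdict
from itertools import chain

def map_phase(document):
    # Map: emit a (word, 1) pair for every word in one input document.
    return [(word.lower(), 1) for word in document.split()]

def shuffle(pairs):
    # Shuffle: group all emitted values by key, as the framework does between phases.
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_phase(key, values):
    # Reduce: combine all values for one key into a single result.
    return key, sum(values)

def word_count(documents):
    mapped = chain.from_iterable(map_phase(doc) for doc in documents)
    grouped = shuffle(mapped)
    return dict(reduce_phase(k, v) for k, v in grouped.items())

docs = ["big data big tools", "simple tools"]
print(word_count(docs))  # {'big': 2, 'data': 1, 'tools': 2, 'simple': 1}
```

Even this toy version hints at why the model demanded code-first engineering: every job had to be expressed as explicit map and reduce functions.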

The 2020s: Engineering for the Data Lifecycle

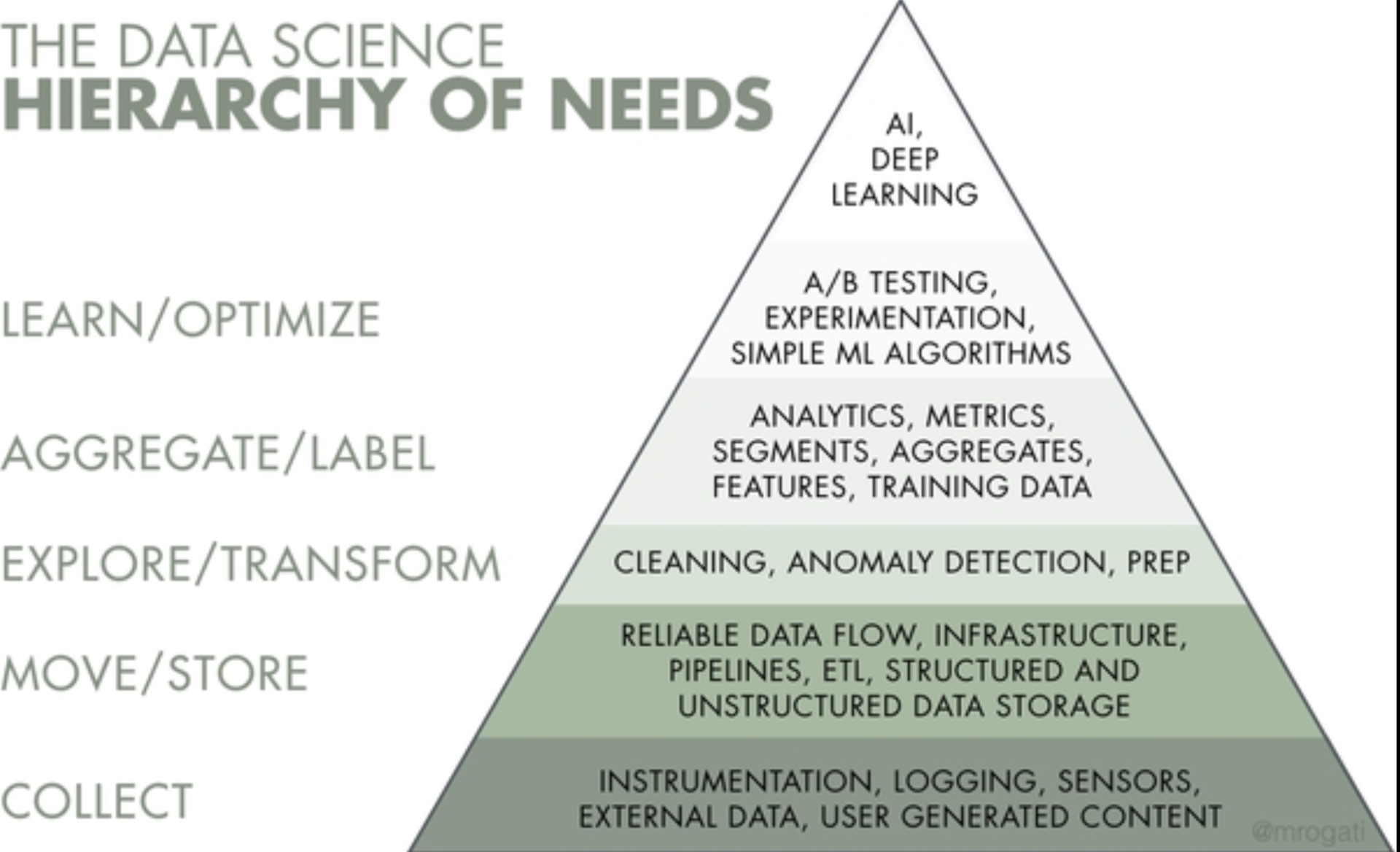

Whereas data engineers historically tended to the low-level details of monolithic frameworks such as Hadoop, Spark, or Informatica, the trend is moving toward decentralized, modularized, managed, and highly abstracted tools. Popular trends in the early 2020s include the modern data stack, representing a collection of off-the-shelf open source and third-party products assembled to make analysts’ lives easier.

With greater abstraction and simplification, a data lifecycle engineer is no longer encumbered by the gory details of yesterday’s big data frameworks. While data engineers maintain skills in low-level data programming and use these as required, they increasingly find their role focused on things higher in the value chain: security, data management, DataOps, data architecture, orchestration, and general data lifecycle management.

Instead of focusing on who has the “biggest data,” open source projects and services are increasingly concerned with managing and governing data, making it easier to use and discover, and improving its quality. Data engineers managing the data engineering lifecycle have better tools and techniques than ever before.

Data Engineering and Data Science

Data scientists aren’t typically trained to engineer production-grade data systems, and they end up doing this work haphazardly because they lack the support and resources of a data engineer.

Data Engineering Skills and Activities

The skill set of a data engineer encompasses the “undercurrents” of data engineering:

Security

Data Management

DataOps

Data Architecture

Software Engineering

This skill set requires an understanding of how to evaluate data tools and how they fit together across the data engineering lifecycle. It’s also critical to know how data is produced in source systems and how analysts and data scientists will consume it and create value from it once it has been processed and curated. Finally, a data engineer juggles a lot of complex moving parts and must constantly optimize along the axes of:

Cost

Agility

Scalability

Simplicity

Reuse

Interoperability

The data engineer is also expected to create agile data architectures that evolve as new trends emerge.

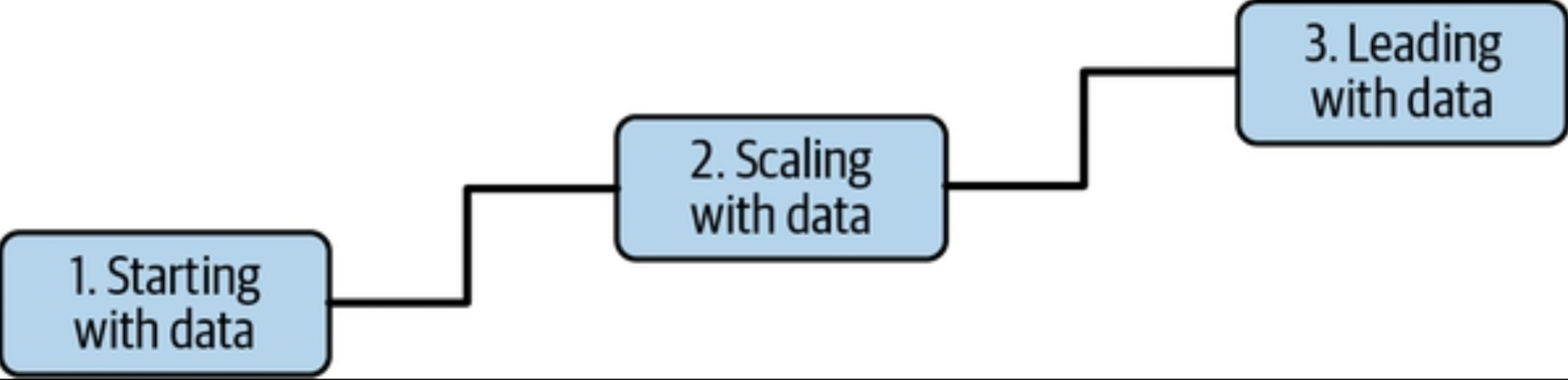

Data Maturity and the Data Engineer

Data maturity is the progression toward higher data utilization, capabilities, and integration across the organization, but data maturity does not simply depend on the age or revenue of a company. An early-stage startup can have greater data maturity than a 100-year-old company with annual revenues in the billions. What matters is the way data is leveraged as a competitive advantage.

Stage 1: Starting with Data

A data engineer should focus on the following in organizations getting started with data:

Get buy-in from key stakeholders, including executive management. Ideally, the data engineer should have a sponsor for critical initiatives to design and build a data architecture to support the company’s goals.

Define the right data architecture (usually solo, since a data architect likely isn’t available). This means determining business goals and the competitive advantage you’re aiming to achieve with your data initiative. Work toward a data architecture that supports these goals.

Identify and audit data that will support key initiatives and operate within the data architecture you designed.

Build a solid data foundation for future data analysts and data scientists to generate reports and models that provide competitive value. In the meantime, you may also have to generate these reports and models until this team is hired.

Pitfalls:

Just keep in mind that quick wins will likely create technical debt. Have a plan to reduce this debt, as it will otherwise add friction for future delivery.

Build custom solutions and code only where this creates a competitive advantage.

Stage 2: Scaling with Data

In organizations that are in stage 2 of data maturity, a data engineer’s goals are to do the following:

Establish formal data practices

Create scalable and robust data architectures

Adopt DevOps and DataOps practices

Build systems that support ML

Continue to avoid undifferentiated heavy lifting and customize only when a competitive advantage results

Issues to watch out for include the following:

As we grow more sophisticated with data, there’s a temptation to adopt bleeding-edge technologies based on social proof from Silicon Valley companies. This is rarely a good use of your time and energy. Any technology decisions should be driven by the value they’ll deliver to your customers.

The main bottleneck for scaling is not cluster nodes, storage, or technology but the data engineering team. Focus on solutions that are simple to deploy and manage to expand your team’s throughput.

You’ll be tempted to frame yourself as a technologist, a data genius who can deliver magical products. Shift your focus instead to pragmatic leadership and begin transitioning to the next maturity stage; communicate with other teams about the practical utility of data. Teach the organization how to consume and leverage data.

Stage 3: Leading with Data

In organizations in stage 3 of data maturity, a data engineer will continue building on prior stages, plus they will do the following:

Create automation for the seamless introduction and usage of new data

Focus on building custom tools and systems that leverage data as a competitive advantage

Focus on the “enterprisey” aspects of data, such as data management (including data governance and quality) and DataOps

Deploy tools that expose and disseminate data throughout the organization, including data catalogs, data lineage tools, and metadata management systems

Collaborate efficiently with software engineers, ML engineers, analysts, and others

Create a community and environment where people can collaborate and speak openly, no matter their role or position

Issues to watch out for include the following:

At this stage, complacency is a significant danger. Once organizations reach stage 3, they must constantly focus on maintenance and improvement or risk falling back to a lower stage.

Technology distractions are a more significant danger here than in the other stages. There’s a temptation to pursue expensive hobby projects that don’t deliver value to the business. Utilize custom-built technology only where it provides a competitive advantage.

Background and Skills of a Data Engineer

Data engineering is a fast-growing field, and a lot of questions remain about how to become a data engineer. Because data engineering is a relatively new discipline, little formal training is available to enter the field. By definition, a data engineer must understand both data and technology.

Business Responsibilities

Know how to communicate with nontechnical and technical people. Communication is key, and you need to be able to establish rapport and trust with people across the organization. We suggest paying close attention to organizational hierarchies, who reports to whom, how people interact, and which silos exist. These observations will be invaluable to your success.

Understand how to scope and gather business and product requirements. You need to know what to build and ensure that your stakeholders agree with your assessment. In addition, develop a sense of how data and technology decisions impact the business.

Understand the cultural foundations of Agile, DevOps, and DataOps. Many technologists mistakenly believe these practices are solved through technology. We feel this is dangerously wrong. Agile, DevOps, and DataOps are fundamentally cultural, requiring buy-in across the organization.

Control costs. You’ll be successful when you can keep costs low while providing outsized value. Know how to optimize for time to value, the total cost of ownership, and opportunity cost. Learn to monitor costs to avoid surprises.

Learn continuously. The data field feels like it’s changing at light speed. People who succeed in it are great at picking up new things while sharpening their fundamental knowledge. They’re also good at filtering, determining which new developments are most relevant to their work, which are still immature, and which are just fads. Stay abreast of the field and learn how to learn.

A successful data engineer always zooms out to understand the big picture and how to achieve outsized value for the business.

Technical Responsibilities

You must understand how to build architectures that optimize performance and cost at a high level, using prepackaged or homegrown components. Recall that this spans every stage of the data engineering lifecycle (generation, storage, ingestion, transformation, and serving) as well as its undercurrents (security, data management, DataOps, data architecture, orchestration, and software engineering).

A data engineer should have production-grade software engineering chops. Even in a more abstract world, software engineering best practices provide a competitive advantage, and data engineers who can dive into the deep architectural details of a codebase give their companies an edge when specific technical needs arise.

A data engineer should know:

SQL

Python

JVM languages, such as Java or Scala (for Spark, Hive, Druid, Kafka, etc.)

Bash

Data engineers may also need to develop proficiency in secondary programming languages, including R, JavaScript, Go, Rust, C/C++, C#, and Julia. Focus on the fundamentals to understand what’s not going to change; pay attention to ongoing developments to know where the field is going.

The Continuum of Data Engineer Roles, from A to B

Data maturity is a helpful guide to understanding the types of data challenges a company will face as it grows its data capability.

The two types of data engineers:

Type A data engineers: A stands for abstraction. In this case, the data engineer avoids undifferentiated heavy lifting, keeping data architecture as abstract and straightforward as possible and not reinventing the wheel. Type A data engineers manage the data engineering lifecycle mainly by using off-the-shelf products, managed services, and tools. Type A data engineers work at companies across industries and at all levels of data maturity.

Type B data engineers: B stands for build. Type B data engineers build data tools and systems that scale and leverage a company’s core competency and competitive advantage. In the data maturity range, a type B data engineer is more commonly found at companies in stages 2 and 3 (scaling and leading with data), or when an initial data use case is so unique and mission-critical that custom data tools are required to get started.

Data Engineers Inside an Organization

Internal-Facing Versus External-Facing Data Engineers

An external-facing data engineer typically aligns with the users of external-facing applications, such as social media apps, Internet of Things (IoT) devices, and ecommerce platforms. External-facing query engines often handle much larger concurrency loads than internal-facing systems.

An internal-facing data engineer typically focuses on activities crucial to the needs of the business and internal stakeholders. External-facing and internal-facing responsibilities are often blended. In practice, internal-facing data is usually a prerequisite to external-facing data.

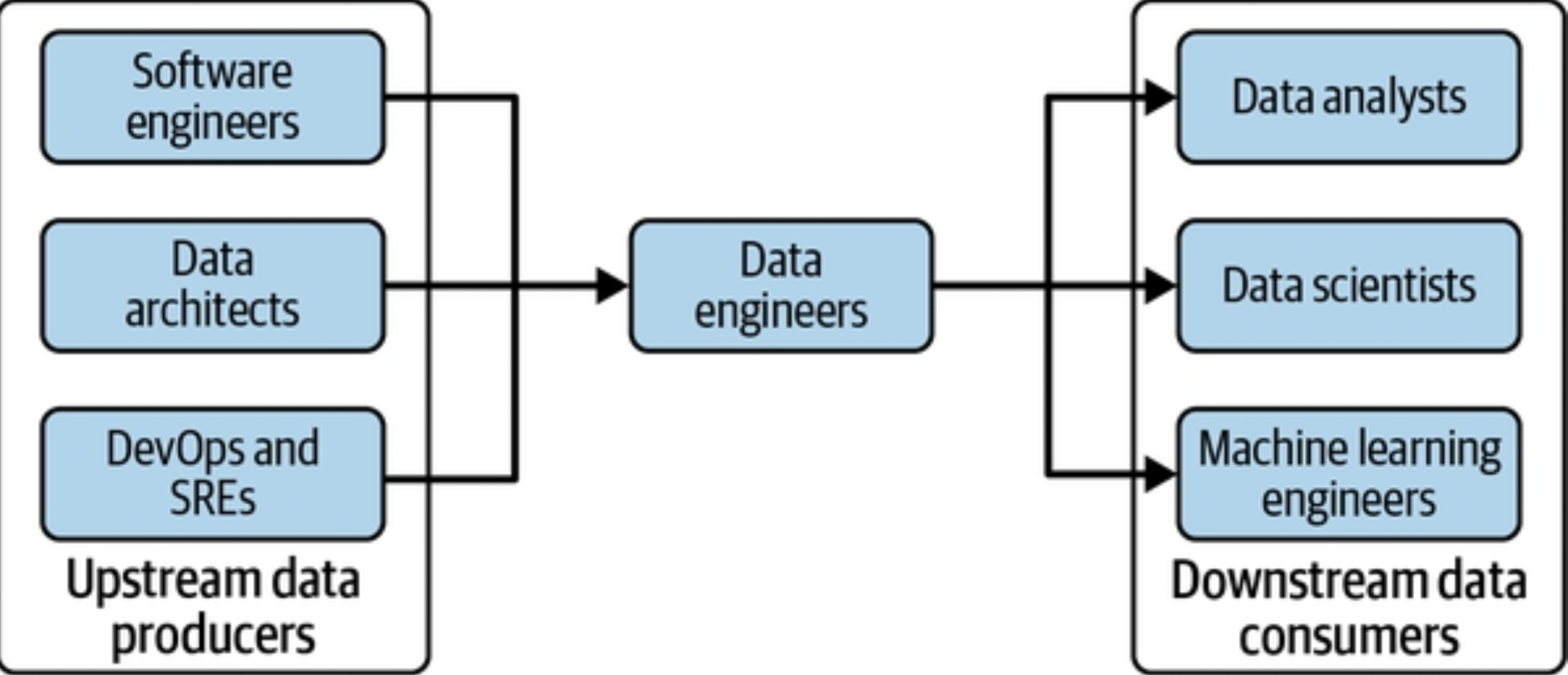

Data Engineers and Other Technical Roles

The data engineer is a hub between data producers, such as software engineers, data architects, and DevOps or site-reliability engineers (SREs), and data consumers, such as data analysts, data scientists, and ML engineers.

Upstream Stakeholders

To be successful as a data engineer, you need to understand the data architecture you’re using or designing and the source systems producing the data you’ll need.

Data architects function at a level of abstraction one step removed from data engineers. Data architects design the blueprint for organizational data management, mapping out processes and overall data architecture and systems. Data architects implement policies for managing data across silos and business units, steer global strategies such as data management and data governance, and guide significant initiatives.

Software engineers build the software and systems that run a business; they are largely responsible for generating the internal data that data engineers will consume and process.

DevOps engineers and SREs often produce data through operational monitoring.

Downstream Stakeholders

Data engineering exists to serve downstream data consumers and use cases.

Data scientists build forward-looking models to make predictions and recommendations. According to common industry folklore, data scientists spend 70% to 80% of their time collecting, cleaning, and preparing data. In our experience, these numbers often reflect immature data science and data engineering practices.

Data analysts (or business analysts) seek to understand business performance and trends. Whereas data scientists are forward-looking, a data analyst typically focuses on the past or present.

Machine learning engineers (ML engineers) overlap with data engineers and data scientists. ML engineers develop advanced ML techniques, train models, and design and maintain the infrastructure running ML processes in a scaled production environment. ML engineers often have advanced working knowledge of ML and deep learning techniques and frameworks such as PyTorch or TensorFlow. The boundaries between ML engineering, data engineering, and data science are blurry. The world of ML engineering is snowballing and parallels a lot of the same developments occurring in data engineering.