Notes from "Fundamentals of Data Engineering": The Data Engineering Lifecycle

The Data Engineering Lifecycle

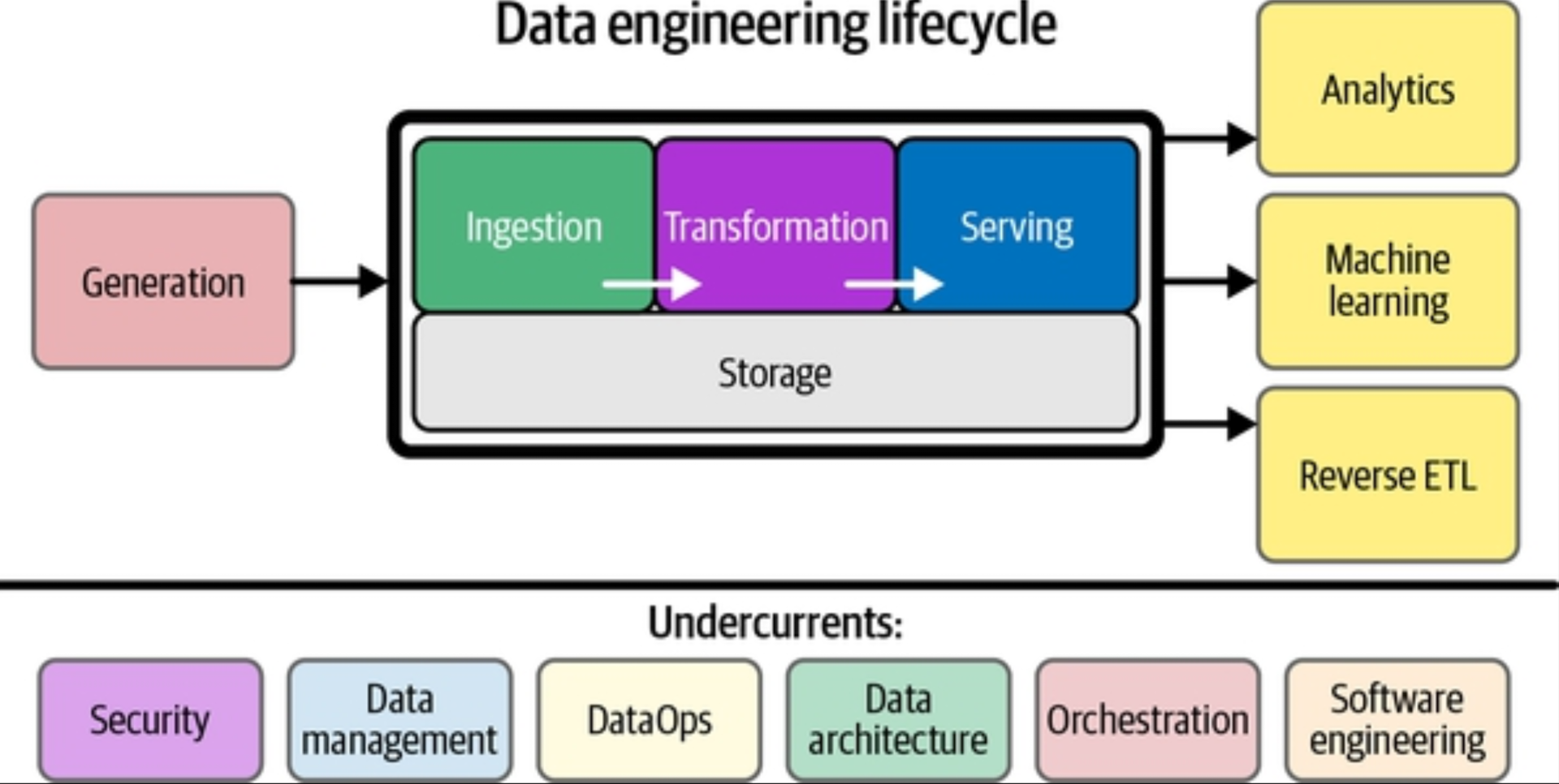

Because of increased technical abstraction, data engineers will increasingly become data lifecycle engineers, thinking and operating in terms of the principles of data lifecycle management. The data engineering lifecycle is our framework describing “cradle to grave” data engineering.

What Is the Data Engineering Lifecycle?

We divide the data engineering lifecycle into five stages:

Generation

Storage

Ingestion

Transformation

Serving data

We begin the data engineering lifecycle by getting data from source systems and storing it. Next, we transform the data and then proceed to our central goal, serving data to analysts, data scientists, ML engineers, and others. In general, the middle stages—storage, ingestion, transformation—can get a bit jumbled.

The Data Lifecycle Versus the Data Engineering Lifecycle

The data engineering lifecycle is a subset of the whole data lifecycle (Figure 2-2). Whereas the full data lifecycle encompasses data across its entire lifespan, the data engineering lifecycle focuses on the stages a data engineer controls.

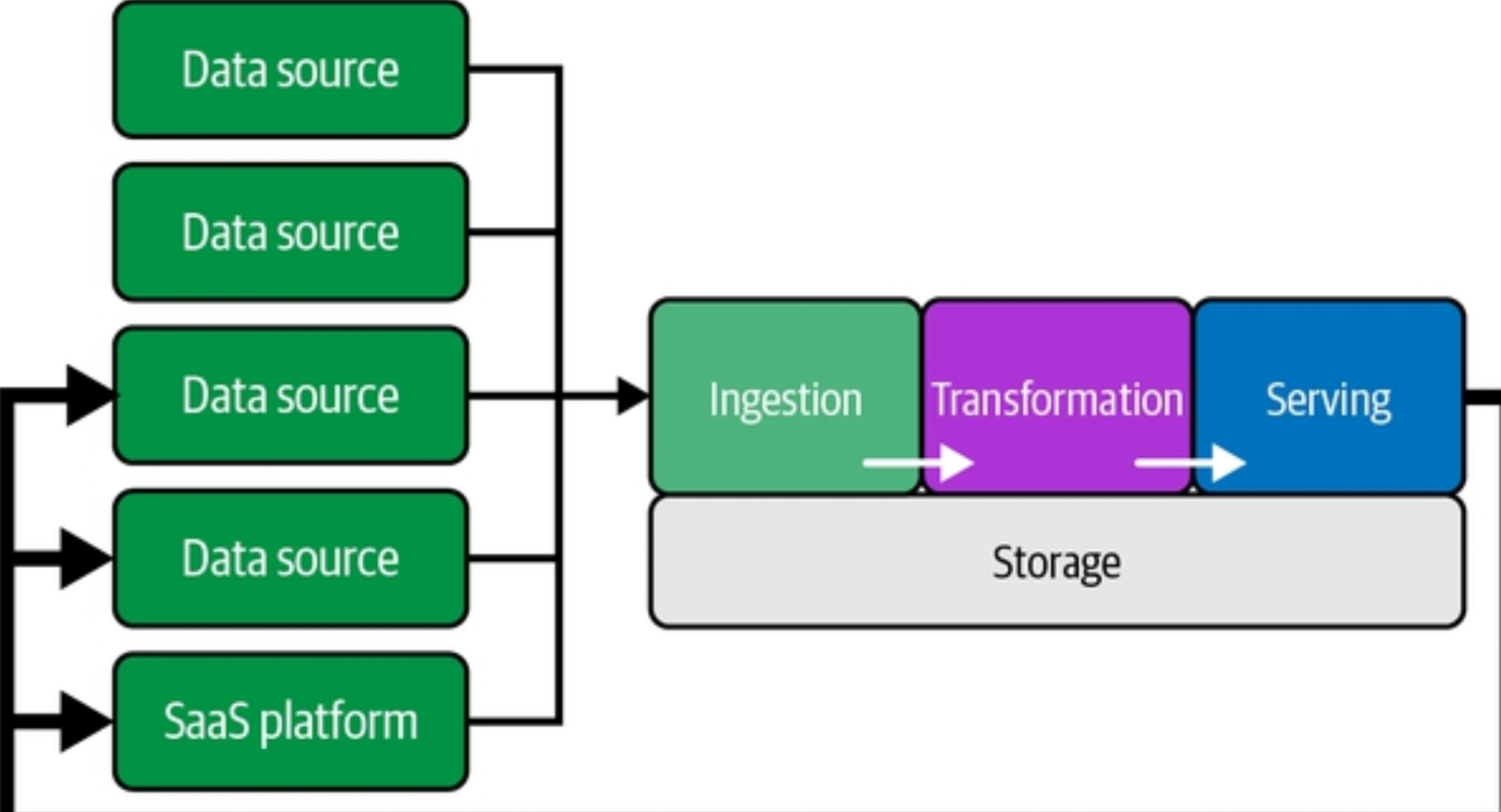

Generation: Source Systems

A source system is the origin of the data used in the data engineering lifecycle. A data engineer consumes data from a source system but doesn’t typically own or control the source system itself. The data engineer needs to have a working understanding of the way source systems work, the way they generate data, the frequency and velocity of the data, and the variety of data they generate.

Evaluating Source Systems: Key Engineering Considerations

There are many things to consider when assessing source systems, including how the system handles ingestion, state, and data generation.

What are the essential characteristics of the data source? Is it an application? A swarm of IoT devices?

How is data persisted in the source system? Is data persisted long term, or is it temporary and quickly deleted?

At what rate is data generated? How many events per second? How many gigabytes per hour?

What level of consistency can data engineers expect from the output data? If you’re running data-quality checks against the output data, how often do data inconsistencies occur—nulls where they aren’t expected, lousy formatting, etc.?

How often do errors occur?

Will the data contain duplicates?

Will some data values arrive late, possibly much later than other messages produced simultaneously?

What is the schema of the ingested data? Will data engineers need to join across several tables or even several systems to get a complete picture of the data?

If schema changes (say, a new column is added), how is this dealt with and communicated to downstream stakeholders?

How frequently should data be pulled from the source system?

For stateful systems (e.g., a database tracking customer account information), is data provided as periodic snapshots or update events from change data capture (CDC)?

What’s the logic for how changes are performed, and how are these tracked in the source database?

Who/what is the data provider that will transmit the data for downstream consumption?

Will reading from a data source impact its performance?

Does the source system have upstream data dependencies? What are the characteristics of these upstream systems?

Are data-quality checks in place to check for late or missing data?
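The question above about periodic snapshots versus CDC update events can be illustrated with a small sketch. It assumes two full snapshots of a table, each held in memory as a dict keyed by primary key, and derives change events by comparing them. The function name `diff_snapshots` and the sample rows are hypothetical, and this is not how a real CDC tool works (those usually read the database log).

```python
from typing import Any, Optional

def diff_snapshots(old: dict, new: dict) -> list:
    """Derive (operation, key, row) events by comparing two full snapshots."""
    events: list = []
    for key, row in new.items():
        if key not in old:
            events.append(("insert", key, row))
        elif old[key] != row:
            events.append(("update", key, row))
    for key in old:
        if key not in new:
            # Deleted rows carry no payload in this sketch.
            events.append(("delete", key, None))
    return events

# Hypothetical customer-account snapshots taken one day apart.
yesterday = {1: {"name": "Ana", "plan": "free"}, 2: {"name": "Bo", "plan": "pro"}}
today = {1: {"name": "Ana", "plan": "pro"}, 3: {"name": "Cy", "plan": "free"}}

events = diff_snapshots(yesterday, today)
for operation, key, row in events:
    print(operation, key, row)
```

A snapshot diff only sees the net change between two points in time. If a row changes twice between snapshots, the intermediate state is lost, which is one reason to ask whether the source can provide change events directly.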

A data engineer should know how the source generates data, including relevant quirks or nuances. The schema defines the hierarchical organization of data. Schemaless doesn’t mean the absence of schema. Rather, it means that the application defines the schema as data is written, whether to a message queue, a flat file, a blob, or a document database such as MongoDB. A more traditional model built on relational database storage uses a fixed schema enforced in the database, to which application writes must conform.
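As a rough illustration of the difference between a fixed schema and a "schemaless" store, the sketch below uses Python's standard `sqlite3` module for the enforced case and a plain list of JSON strings as a stand-in for a document store. The table name, fields, and sample values are assumptions made for the example.

```python
import json
import sqlite3

# Fixed schema: the database rejects writes that do not conform.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT NOT NULL)")
conn.execute("INSERT INTO customers (id, email) VALUES (?, ?)", (1, "ana@example.com"))
try:
    conn.execute("INSERT INTO customers (id, email) VALUES (?, ?)", (2, None))
    rejected = False
except sqlite3.IntegrityError:
    # The NOT NULL constraint is enforced by the database, not the application.
    rejected = True

# "Schemaless": the store accepts any document; the application defines the shape.
documents = [
    json.dumps({"id": 1, "email": "ana@example.com"}),
    json.dumps({"id": 2, "phone": "555-0100"}),  # different fields, still accepted
]
fields_seen = sorted({key for doc in documents for key in json.loads(doc)})

print("write rejected by fixed schema:", rejected)
print("fields found across documents:", fields_seen)
```

In the second case a schema still exists, but it lives in the writing application and has to be discovered or documented by whoever reads the data downstream.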

Storage

Storage is one of the most complicated stages of the data engineering lifecycle, for three reasons. First, data architectures in the cloud often leverage several storage solutions. Second, few data storage solutions function purely as storage, with many supporting complex transformation queries; even object storage solutions may support powerful query capabilities (e.g., Amazon S3 Select). Third, while storage is a stage of the data engineering lifecycle, it frequently touches on other stages, such as ingestion, transformation, and serving.

Storage runs across the entire data engineering lifecycle, often occurring in multiple places in a data pipeline, with storage systems crossing over with source systems, ingestion, transformation, and serving. In many ways, the way data is stored impacts how it is used in all of the stages of the data engineering lifecycle.

Evaluating Storage Systems: Key Engineering Considerations

Here are a few key engineering questions to ask when choosing a storage system for a data warehouse, data lakehouse, database, or object storage:

Is this storage solution compatible with the architecture’s required write and read speeds?

Will storage create a bottleneck for downstream processes? Do you understand how this storage technology works? Are you utilizing the storage system optimally or committing unnatural acts? For instance, are you applying a high rate of random access updates in an object storage system? (This is an antipattern with significant performance overhead.)

Will this storage system handle anticipated future scale? You should consider all capacity limits on the storage system: total available storage, read operation rate, write volume, etc.

Will downstream users and processes be able to retrieve data in the required service-level agreement (SLA)?

Are you capturing metadata about schema evolution, data flows, data lineage, and so forth? Metadata has a significant impact on the utility of data. Metadata represents an investment in the future, dramatically enhancing discoverability and institutional knowledge to streamline future projects and architecture changes.

Is this a pure storage solution (object storage), or does it support complex query patterns (e.g., a cloud data warehouse)?

Is the storage system schema-agnostic (object storage)? Flexible schema (Cassandra)? Enforced schema (a cloud data warehouse)?

How are you tracking master data, golden records, data quality, and data lineage for data governance? (We have more to say on these in “Data Management”.)

How are you handling regulatory compliance and data sovereignty? For example, can you store your data in certain geographical locations but not others?

Understanding Data Access Frequency

Data that is most frequently accessed is called hot data. Hot data is commonly retrieved many times per day, perhaps even several times per second—for example, in systems that serve user requests. This data should be stored for fast retrieval, where “fast” is relative to the use case. Lukewarm data might be accessed every so often—say, every week or month. Cold data is seldom queried and is appropriate for storing in an archival system. Cold data is often retained for compliance purposes or in case of a catastrophic failure in another system.
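One way to make the hot, lukewarm, and cold distinction operational is to classify datasets by how often they are read. The sketch below is only an illustration: the function name and both thresholds (at least once a day for hot, at least about once a month for lukewarm) are assumptions, since "fast" and "frequent" are relative to the use case.

```python
def storage_tier(accesses_per_day: float) -> str:
    """Map an observed access rate to a storage temperature (illustrative thresholds)."""
    if accesses_per_day >= 1:
        return "hot"        # read daily or more often
    if accesses_per_day >= 1 / 31:
        return "lukewarm"   # read roughly weekly to monthly
    return "cold"           # seldom read; candidate for archival storage

for name, rate in [("user_sessions", 500), ("weekly_report", 1 / 7), ("audit_2015", 1 / 365)]:
    print(name, storage_tier(rate))
```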

Selecting a Storage System

The right storage system depends on your use cases, data volumes, frequency of ingestion, format, and size of the data being ingested; in short, on the key considerations listed in the preceding questions. There is no one-size-fits-all universal storage recommendation.

Ingestion

Source systems and ingestion often represent the most significant bottlenecks of the data engineering lifecycle.

Key Engineering Considerations for the Ingestion Phase

When preparing to architect or build a system, here are some primary questions about the ingestion stage:

What are the use cases for the data I’m ingesting? Can I reuse this data rather than create multiple versions of the same dataset?

Are the systems generating and ingesting this data reliably, and is the data available when I need it?

What is the data destination after ingestion?

How frequently will I need to access the data?

In what volume will the data typically arrive? What format is the data in? Can my downstream storage and transformation systems handle this format?

Is the source data in good shape for immediate downstream use? If so, for how long, and what may cause it to be unusable?

If the data is from a streaming source, does it need to be transformed before reaching its destination? Would an in-flight transformation be appropriate, where the data is transformed within the stream itself?

Batch Versus Streaming

Adopt true real-time streaming only after identifying a business use case that justifies the trade-offs against using batch.

Virtually all data we deal with is inherently streaming. Data is nearly always produced and updated continually at its source. Batch ingestion is simply a specialized and convenient way of processing this stream in large chunks—for example, handling a full day’s worth of data in a single batch.

Real-time (or near real-time) means that the data is available to a downstream system a short time after it is produced (e.g., less than one second later). Batch data is ingested either on a predetermined time interval or as data reaches a preset size threshold. Batch ingestion is a one-way door: once data is broken into batches, the latency for downstream consumers is inherently constrained.
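The two batch triggers described above, a time interval and a size threshold, can be sketched as a small buffer. The class name `Batcher` and its parameters are hypothetical. To keep the sketch short, the age check only runs when a new record arrives; a real ingestion system would also flush on a timer.

```python
import time
from typing import Any, Callable, Optional

class Batcher:
    """Buffer records and release a batch on a size or age threshold."""

    def __init__(self, max_size: int, max_age_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self.max_size = max_size
        self.max_age_seconds = max_age_seconds
        self.clock = clock  # injectable so the age threshold can be tested
        self.buffer: list = []
        self.opened_at: Optional[float] = None

    def add(self, record: Any) -> Optional[list]:
        """Add a record; return the batch if a threshold was reached, else None."""
        now = self.clock()
        if self.opened_at is None:
            self.opened_at = now  # the first record opens a new batch
        self.buffer.append(record)
        too_big = len(self.buffer) >= self.max_size
        too_old = now - self.opened_at >= self.max_age_seconds
        if too_big or too_old:
            batch, self.buffer, self.opened_at = self.buffer, [], None
            return batch
        return None

batcher = Batcher(max_size=3, max_age_seconds=60)
for record in ["a", "b", "c", "d"]:
    batch = batcher.add(record)
    if batch is not None:
        print("flush:", batch)
```

The thresholds set a floor on latency: a consumer cannot see a record before its batch is released, which is the constraint the "one-way door" remark refers to.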

Key Considerations for Batch versus Stream Ingestion

The following are some questions to ask yourself when determining whether streaming ingestion is an appropriate choice over batch ingestion:

If I ingest the data in real time, can downstream storage systems handle the rate of data flow?

Do I need millisecond real-time data ingestion? Or would a micro-batch approach work, accumulating and ingesting data, say, every minute?

What are my use cases for streaming ingestion? What specific benefits do I realize by implementing streaming? If I get data in real time, what actions can I take on that data that would be an improvement upon batch?

Will my streaming-first approach cost more in terms of time, money, maintenance, downtime, and opportunity cost than simply doing batch?

Are my streaming pipeline and system reliable and redundant if infrastructure fails?

What tools are most appropriate for the use case? Should I use a managed service (Amazon Kinesis, Google Cloud Pub/Sub, Google Cloud Dataflow) or stand up my own instances of Kafka, Flink, Spark, Pulsar, etc.? If I do the latter, who will manage it? What are the costs and trade-offs?

If I’m deploying an ML model, what benefits do I have with online predictions and possibly continuous training?

Am I getting data from a live production instance? If so, what’s the impact of my ingestion process on this source system?

Push versus Pull

In the push model of data ingestion, a source system writes data out to a target, whether a database, object store, or filesystem. In the pull model, data is retrieved from the source system.
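The difference between the two models is mostly about who initiates the transfer. A minimal in-memory sketch, in which the `Source` class and its method names are hypothetical and a plain list stands in for the target system:

```python
class Source:
    """Hypothetical source system that supports both ingestion models."""

    def __init__(self, events):
        self._events = list(events)
        self._targets = []

    def read_since(self, offset: int) -> list:
        # Pull model: the consumer asks the source for data after an offset.
        return self._events[offset:]

    def subscribe(self, target: list) -> None:
        # Register a target that the source will write to.
        self._targets.append(target)

    def emit(self, event) -> None:
        # Push model: the source writes each new event out to its targets.
        self._events.append(event)
        for target in self._targets:
            target.append(event)

source = Source(["e1", "e2"])
pushed_target: list = []
source.subscribe(pushed_target)
source.emit("e3")                 # the source initiates the write
pulled = source.read_since(0)     # the consumer initiates the read

print("pushed:", pushed_target)
print("pulled:", pulled)
```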

Transformation

After you’ve ingested and stored data, you need to do something with it. The next stage of the data engineering lifecycle is transformation, meaning data needs to be changed from its original form into something useful for downstream use cases. Without proper transformations, data will sit inert, and not be in a useful form for reports, analysis, or ML.

Key Considerations for the Transformation Phase

When considering data transformations within the data engineering lifecycle, it helps to consider the following:

What’s the cost and return on investment (ROI) of the transformation? What is the associated business value?

Is the transformation as simple and self-isolated as possible?

What business rules do the transformations support?

Logically, we treat transformation as a standalone area of the data engineering lifecycle, but the realities of the lifecycle can be much more complicated in practice. Transformation is often entangled in other phases of the lifecycle. Business logic is a major driver of data transformation, often in data modeling.

Serving Data

Data has value when it’s used for practical purposes. Data that is not consumed or queried is simply inert. Data vanity projects are a major risk for companies. Many companies pursued vanity projects in the big data era, gathering massive datasets in data lakes that were never consumed in any useful way.

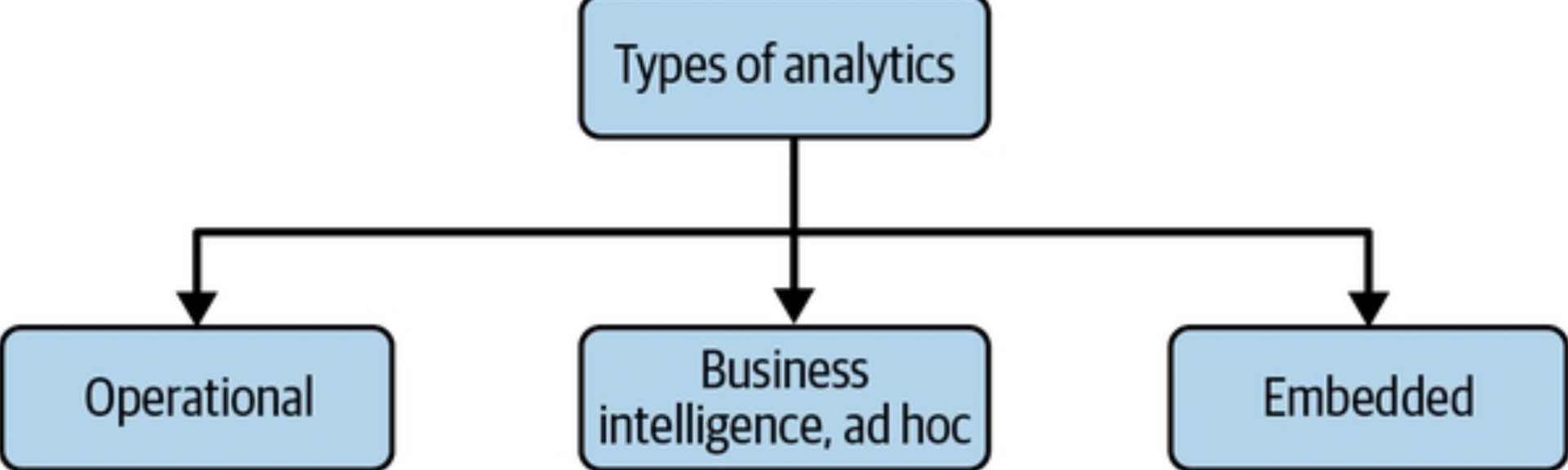

Analytics

Analytics is the core of most data endeavors. Once your data is stored and transformed, you’re ready to generate reports or dashboards and do ad hoc analysis on the data.

Business Intelligence

BI marshals collected data to describe a business’s past and current state. BI requires using business logic to process raw data. Note that data serving for analytics is yet another area where the stages of the data engineering lifecycle can get tangled. As we mentioned earlier, business logic is often applied to data in the transformation stage of the data engineering lifecycle, but a logic-on-read approach has become increasingly popular. Data is stored in a clean but fairly raw form, with minimal postprocessing business logic. A BI system maintains a repository of business logic and definitions.

Although self-service analytics is simple in theory, it’s tough to pull off in practice. The main reason is that poor data quality, organizational silos, and a lack of adequate data skills often get in the way of allowing widespread use of analytics.

Operational Analytics

Operational analytics focuses on the fine-grained details of operations, promoting actions that a user of the reports can act upon immediately.

Embedded Analytics

With embedded analytics, the request rate for reports, and the corresponding burden on analytics systems, goes up dramatically; access control is significantly more complicated and critical. Businesses may be serving separate analytics and data to thousands or more customers. Each customer must see their data and only their data.

Machine Learning

The responsibilities of data engineers overlap significantly in analytics and ML, and the boundaries between data engineering, ML engineering, and analytics engineering can be fuzzy. For example, a data engineer may need to support Spark clusters that facilitate analytics pipelines and ML model training. They may also need to provide a system that orchestrates tasks across teams and support metadata and cataloging systems that track data history and lineage. Setting these domains of responsibility and the relevant reporting structures is a critical organizational decision.

The feature store is a recently developed tool that combines data engineering and ML engineering. Feature stores are designed to reduce the operational burden for ML engineers by maintaining feature history and versions, supporting feature sharing among teams, and providing basic operational and orchestration capabilities, such as backfilling.

The following are some considerations for the serving data phase specific to ML:

Is the data of sufficient quality to perform reliable feature engineering? Quality requirements and assessments are developed in close collaboration with teams consuming the data.

Is the data discoverable? Can data scientists and ML engineers easily find valuable data?

Where are the technical and organizational boundaries between data engineering and ML engineering? This organizational question has significant architectural implications.

Does the dataset properly represent ground truth? Is it unfairly biased?

Reverse ETL

Reverse ETL takes processed data from the output side of the data engineering lifecycle and feeds it back into source systems. This flow is beneficial and often necessary; reverse ETL allows us to take analytics, scored models, etc., and feed these back into production systems or SaaS platforms.

Major Undercurrents Across the Data Engineering Lifecycle

Data engineering now encompasses far more than tools and technology. The field is now moving up the value chain, incorporating traditional enterprise practices such as data management and cost optimization and newer practices like DataOps.

Security

Data engineers must understand both data and access security, exercising the principle of least privilege. People and organizational structure are always the biggest security vulnerabilities in any company. Data security is also about timing—providing data access to exactly the people and systems that need to access it and only for the duration necessary to perform their work.

Data Management

Data management is the development, execution, and supervision of plans, policies, programs, and practices that deliver, control, protect, and enhance the value of data and information assets throughout their lifecycle. Data management has quite a few facets, including the following:

Data governance, including discoverability and accountability

Data modeling and design

Data lineage

Storage and operations

Data integration and interoperability

Data lifecycle management

Data systems for advanced analytics and ML

Ethics and privacy

Data Governance

Data governance is, first and foremost, a data management function to ensure the quality, integrity, security, and usability of the data collected by an organization.

Discoverability - In a data-driven company, data must be available and discoverable.

Metadata - Metadata is “data about data,” and it underpins every stage of the data engineering lifecycle.

DMBOK (Data Management Body of Knowledge) identifies four main categories of metadata that are useful to data engineers:

Business metadata - Business metadata relates to the way data is used in the business, including business and data definitions, data rules and logic, how and where data is used, and the data owner(s). A data engineer uses business metadata to answer nontechnical questions about who, what, where, and how. For example, a data engineer may be tasked with creating a data pipeline for customer sales analysis.

Technical metadata - Technical metadata describes the data created and used by systems across the data engineering lifecycle. Here are some common types of technical metadata that a data engineer will use:

Pipeline metadata (often produced in orchestration systems) - Pipeline metadata captured in orchestration systems provides details of the workflow schedule, system and data dependencies, configurations, connection details, and much more.

Data lineage - Data-lineage metadata tracks the origin and changes to data, and its dependencies, over time.

Schema - Schema metadata describes the structure of data stored in a system such as a database, a data warehouse, a data lake, or a filesystem; it is one of the key differentiators across different storage systems.

Operational metadata - Operational metadata describes the operational results of various systems and includes statistics about processes, job IDs, application runtime logs, data used in a process, and error logs. A data engineer uses operational metadata to determine whether a process succeeded or failed and the data involved in the process.

Reference metadata - Reference metadata is data used to classify other data. This is also referred to as lookup data. Standard examples of reference data are internal codes, geographic codes, units of measurement, and internal calendar standards.

Data Accountability

Data accountability means assigning an individual to govern a portion of data. The responsible person then coordinates the governance activities of other stakeholders. Managing data quality is tough if no one is accountable for the data in question.

Data Quality

Data quality is the optimization of data toward the desired state and orbits the question, “What do you get compared with what you expect?” Data should conform to the expectations in the business metadata. Does the data match the definition agreed upon by the business?

According to Data Governance: The Definitive Guide, data quality is defined by three main characteristics:

Accuracy - Is the collected data factually correct? Are there duplicate values? Are the numeric values accurate?

Completeness - Are the records complete? Do all required fields contain valid values?

Timeliness - Are records available in a timely fashion?
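The three characteristics can be turned into simple counts over a batch of records. The sketch below is one possible reading, not the definition from the cited guide: duplicates stand in for one aspect of accuracy, missing required fields for completeness, and load delay for timeliness. The function name, field names (`event_time`, `loaded_at`), and the one-hour threshold are all assumptions.

```python
from datetime import datetime, timedelta

def quality_report(records, required_fields, max_delay, key="id"):
    """Count duplicate keys, incomplete records, and late-arriving records."""
    seen = set()
    duplicates = incomplete = late = 0
    for record in records:
        if record.get(key) in seen:
            duplicates += 1  # accuracy: the same key appears more than once
        seen.add(record.get(key))
        if any(record.get(field) in (None, "") for field in required_fields):
            incomplete += 1  # completeness: a required field is missing or empty
        if record["loaded_at"] - record["event_time"] > max_delay:
            late += 1        # timeliness: arrived later than the agreed delay
    return {"duplicates": duplicates, "incomplete": incomplete, "late": late}

t0 = datetime(2024, 1, 1, 12, 0)
records = [
    {"id": 1, "email": "a@example.com", "event_time": t0, "loaded_at": t0 + timedelta(minutes=5)},
    {"id": 1, "email": "a@example.com", "event_time": t0, "loaded_at": t0 + timedelta(minutes=5)},
    {"id": 2, "email": None, "event_time": t0, "loaded_at": t0 + timedelta(hours=3)},
]
report = quality_report(records, required_fields=["id", "email"], max_delay=timedelta(hours=1))
print(report)
```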

Master data is data about business entities such as employees, customers, products, and locations. Master data management (MDM) is the practice of building consistent entity definitions known as golden records. Golden records harmonize entity data across an organization and with its partners.

Data Modeling and Design

To derive business insights from data, through business analytics and data science, the data must be in a usable form. The process for converting data into a usable form is known as data modeling and design. With the wide variety of data that engineers must cope with, there is a temptation to throw up our hands and give up on data modeling. This is a terrible idea with harrowing consequences, made evident when people murmur of the write once, read never (WORN) access pattern or refer to a data swamp.

Data Lineage

Data lineage describes the recording of an audit trail of data through its lifecycle, tracking both the systems that process the data and the upstream data it depends on.
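Lineage is naturally a graph: each dataset points to the upstream datasets it was built from. A minimal sketch, assuming the graph is available as a dict from dataset name to its direct parents; the dataset names and the helper `upstream_of` are hypothetical.

```python
def upstream_of(dataset: str, parents: dict) -> set:
    """Return every dataset that `dataset` depends on, directly or transitively."""
    found: set = set()
    stack = list(parents.get(dataset, []))
    while stack:
        node = stack.pop()
        if node not in found:
            found.add(node)
            stack.extend(parents.get(node, []))
    return found

# Hypothetical lineage: child dataset -> datasets it is derived from.
lineage = {
    "sales_dashboard": ["daily_sales"],
    "daily_sales": ["orders_clean", "customers_clean"],
    "orders_clean": ["orders_raw"],
    "customers_clean": ["customers_raw"],
}

print(sorted(upstream_of("sales_dashboard", lineage)))
```

The same traversal run in the opposite direction answers the impact question: which downstream datasets are affected if a given source changes.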

Data Integration and Interoperability

Data integration and interoperability is the process of integrating data across tools and processes. While the complexity of interacting with data systems has decreased, the number of systems and the complexity of pipelines has dramatically increased. Engineers starting from scratch quickly outgrow the capabilities of bespoke scripting and stumble into the need for orchestration.

Data Lifecycle Management

Two changes have encouraged engineers to pay more attention to what happens at the end of the data engineering lifecycle. First, data is increasingly stored in the cloud. Second, privacy and data retention laws such as the GDPR and the CCPA require data engineers to actively manage data destruction to respect users’ “right to be forgotten.”

Ethics and Privacy

The last several years of data breaches, misinformation, and mishandling of data make one thing clear: data impacts people. Data engineers need to ensure that datasets mask personally identifiable information (PII) and other sensitive information; bias can be identified and tracked in datasets as they are transformed. Regulatory requirements and compliance penalties are only growing. Ensure that your data assets are compliant with a growing number of data regulations, such as GDPR and CCPA.
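One common masking technique is to replace PII values with a keyed hash, so records can still be joined on the masked value without exposing the original. The sketch below uses Python's standard `hmac` and `hashlib` modules; the function name, field names, and the hard-coded secret are assumptions for illustration. Keyed hashing is pseudonymization rather than anonymization, and whether it satisfies a given regulation is a legal question this sketch does not answer.

```python
import hashlib
import hmac

def mask_record(record: dict, pii_fields: set, secret: bytes) -> dict:
    """Return a copy of the record with PII fields replaced by a keyed hash."""
    masked = {}
    for field, value in record.items():
        if field in pii_fields and value is not None:
            digest = hmac.new(secret, str(value).encode("utf-8"), hashlib.sha256).hexdigest()
            masked[field] = digest[:16]  # truncated for readability in this sketch
        else:
            masked[field] = value
    return masked

secret = b"replace-with-a-managed-secret"  # assumption: in practice, load from a secrets manager
row = {"user_id": 7, "email": "ana@example.com", "country": "PT"}
masked_row = mask_record(row, pii_fields={"email"}, secret=secret)
print(masked_row["user_id"], masked_row["country"], len(masked_row["email"]))
```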

DataOps

DataOps maps the best practices of Agile methodology, DevOps, and statistical process control (SPC) to data. Whereas DevOps aims to improve the release and quality of software products, DataOps does the same thing for data products. A data product is built around sound business logic and metrics, whose users make decisions or build models that perform automated actions.

Like DevOps, DataOps borrows much from lean manufacturing and supply chain management, mixing people, processes, and technology to reduce time to value. As DataKitchen (experts in DataOps) describes it:

DataOps is a collection of technical practices, workflows, cultural norms, and architectural patterns that enable:

Rapid innovation and experimentation delivering new insights to customers with increasing velocity

Extremely high data quality and very low error rates

Collaboration across complex arrays of people, technology, and environments

Clear measurement, monitoring, and transparency of results

DataOps is a set of cultural habits; the data engineering team needs to adopt a cycle of communicating and collaborating with the business, breaking down silos, continuously learning from successes and mistakes, and rapid iteration. We suggest first starting with observability and monitoring to get a window into the performance of a system, then adding in automation and incident response.

DataOps has three core technical elements: automation, monitoring and observability, and incident response.

Automation

Automation enables reliability and consistency in the DataOps process and allows data engineers to quickly deploy new product features and improvements to existing workflows. Like DevOps, DataOps practices monitor and maintain the reliability of technology and systems (data pipelines, orchestration, etc.), with the added dimension of checking for data quality, data/model drift, metadata integrity, and more.

One of the tenets of the DataOps Manifesto is “Embrace change.” This does not mean change for the sake of change but rather goal-oriented change.

Observability and Monitoring

Observability, monitoring, logging, alerting, and tracing are all critical to getting ahead of any problems along the data engineering lifecycle. We recommend you incorporate SPC to understand whether events being monitored are out of line and which incidents are worth responding to. Petrella’s DODD (Data Observability Driven Development) method provides an excellent framework for thinking about data observability. DODD is much like test-driven development (TDD) in software engineering:

The purpose of DODD is to give everyone involved in the data chain visibility into the data and data applications so that everyone involved in the data value chain has the ability to identify changes to the data or data applications at every step—from ingestion to transformation to analysis—to help troubleshoot or prevent data issues. DODD focuses on making data observability a first-class consideration in the data engineering lifecycle.
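The SPC recommendation in this section can be illustrated with a basic control-limit check on a pipeline metric. The sketch assumes a baseline of daily row counts from a period when the pipeline was healthy and flags values more than three standard deviations from the baseline mean. The metric, the numbers, and the function names are hypothetical, and using the sample standard deviation is a simplification of how control charts are usually constructed.

```python
from statistics import mean, stdev

def control_limits(baseline, sigmas=3.0):
    """Return (lower, upper) limits at `sigmas` standard deviations around the mean."""
    center = mean(baseline)
    spread = stdev(baseline)
    return center - sigmas * spread, center + sigmas * spread

def out_of_control(value, baseline, sigmas=3.0):
    """True if the value falls outside the control limits of the baseline."""
    lower, upper = control_limits(baseline, sigmas)
    return value < lower or value > upper

# Hypothetical daily row counts from a healthy week.
daily_row_counts = [1000, 1020, 980, 1010, 990, 1005, 995]

for todays_count in (1015, 400):
    status = "investigate" if out_of_control(todays_count, daily_row_counts) else "ok"
    print(todays_count, status)
```

The point of the limits is to separate ordinary variation from events worth an incident, so that alerts fire on the second kind only.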

Incident Response

A high-functioning data team using DataOps will be able to ship new data products quickly. But mistakes will inevitably happen. Incident response is about using the automation and observability capabilities mentioned previously to rapidly identify root causes of an incident and resolve it as reliably and quickly as possible.

DataOps Summary

Data engineers would do well to make DataOps practices a high priority in all of their work.

Data Architecture

A data architecture reflects the current and future state of data systems that support an organization’s long-term data needs and strategy. Because an organization’s data requirements will likely change rapidly, and new tools and practices seem to arrive on a near-daily basis, data engineers must understand good data architecture.

A data engineer should first understand the needs of the business and gather requirements for new use cases. Next, a data engineer needs to translate those requirements to design new ways to capture and serve data, balanced for cost and operational simplicity.

Orchestration

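Orchestration is the process of coordinating many jobs to run as quickly and efficiently as possible on a scheduled cadence. For instance, people often refer to orchestration tools like Apache Airflow as schedulers. This isn’t quite accurate: a pure scheduler, such as cron, is aware only of time, whereas an orchestration engine also tracks job dependencies, generally in the form of a directed acyclic graph (DAG).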

Software Engineering

Software engineering has always been a central skill for data engineers.

Core Data Processing Code

It’s imperative that a data engineer understand proper code-testing methodologies, such as unit, regression, integration, end-to-end, and smoke testing.

Development of Open Source Frameworks

Many data engineers are heavily involved in developing open source frameworks. They adopt these frameworks to solve specific problems in the data engineering lifecycle, and then continue developing the framework code to improve the tools for their use cases and contribute back to the community. Keep an eye on the total cost of ownership (TCO) and opportunity cost associated with implementing a tool.

Streaming

Streaming data processing is inherently more complicated than batch, and the tools and paradigms are arguably less mature. Engineers must also write code to apply a variety of windowing methods. Windowing allows real-time systems to calculate valuable metrics such as trailing statistics.
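As an example of windowing, the sketch below maintains a trailing average over a sliding time window. The class name and the window length are assumptions. It also assumes events arrive in timestamp order, which real streams often violate; handling late or out-of-order events is a large part of what makes streaming code harder than batch.

```python
from collections import deque

class TrailingAverage:
    """Average of values whose timestamps fall within the last `window_seconds`."""

    def __init__(self, window_seconds: float):
        self.window_seconds = window_seconds
        self.events = deque()  # (timestamp, value) pairs, oldest first
        self.total = 0.0

    def add(self, timestamp: float, value: float) -> float:
        """Record an event and return the current trailing average."""
        self.events.append((timestamp, value))
        self.total += value
        # Evict events at or before the window start (timestamp - window_seconds).
        while self.events and self.events[0][0] <= timestamp - self.window_seconds:
            _, old_value = self.events.popleft()
            self.total -= old_value
        return self.total / len(self.events)

window = TrailingAverage(window_seconds=60)
for ts, value in [(0, 10.0), (30, 20.0), (70, 30.0), (200, 40.0)]:
    print(ts, window.add(ts, value))
```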

Infrastructure as Code

Infrastructure as code (IaC) applies software engineering practices to the configuration and management of infrastructure.

Pipelines as Code

Pipelines as code is the core concept of present-day orchestration systems, which touch every stage of the data engineering lifecycle. Data engineers use code (typically Python) to declare data tasks and dependencies among them. The orchestration engine interprets these instructions to run steps using available resources.
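The idea of declaring tasks and dependencies in Python and letting an engine decide the run order can be sketched with the standard library's `graphlib` module (Python 3.9 or later). This is a toy stand-in for an orchestration engine, not the API of any real one; the task names and the `run_pipeline` helper are hypothetical.

```python
from graphlib import TopologicalSorter

def run_pipeline(tasks: dict, dependencies: dict) -> list:
    """Run tasks in an order that respects dependencies; return the order used."""
    # `dependencies` maps each task to the set of tasks that must finish first.
    order = list(TopologicalSorter(dependencies).static_order())
    for name in order:
        tasks[name]()
    return order

log = []
tasks = {
    "extract": lambda: log.append("extract"),
    "transform": lambda: log.append("transform"),
    "load": lambda: log.append("load"),
}
dependencies = {"transform": {"extract"}, "load": {"transform"}}

order = run_pipeline(tasks, dependencies)
print(order)
```

A real orchestration engine adds what this sketch leaves out: scheduling, retries, parallel execution of independent tasks, and metadata about each run.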